NetLog: Chrome’s network logging system

Eric Roman & Matt Menke

Overview

NetLog is an event logging mechanism for Chrome’s network stack to help debug problems and analyze performance. It enables a “capture → dump → analyze” style of workflow similar to tools like tcpdump. Here is a typical use case:

- User enables network logging

- User reproduces a problem

- User uploads the log file to a bug report

- Developer analyzes the log file to see what happened

Under the hood, Chrome’s network stack has been instrumented to emit events at various interesting trace points. This event stream can be watched by observers to do interesting things with the data. Some of Chrome’s NetLog observers are:

- (file_net_log_observer.cc) Serializes the event stream to a JSON file that can be loaded by the Catapult NetLog Viewer.

- (net_internals_ui.cc) [Removed in 2018]

Forwards events to the JavaScript application chrome://net-internals. This app can visualize the data and also export it to a file

Goals of NetLog

- Net Logging is off by default

- Net Logging does not affect performance when off

- Net Logging support ships with official builds of Chrome

- Events are easily serialized/deserialized to disk

Non-Goals

NetLog is intended solely for logging. Features needing reliable network information should never be built on top of NetLog. It may be tempting to add an observer of NetLog as a quick hack to glean some internal state (since you circumvent having to create a proper interface and plumb it deep into the network stack). But that is wrong, and it will break. Instead, you should add an interface to NetworkDelegate, complete with regression tests and documentation. Think of NetLog as a fancy way of writing:

LOG(INFO) << “Some network event; bla bla bla”;

It would be silly if any feature broke simply because you moved around such a logging statement or changed its format slightly! Similarly, we want net logging to be easy to add/edit so developers can instrument problem areas as needed.

Terminology

There are a couple of terms Chrome engineers use when talking about this domain. They sound similar but have slightly different meanings!

- “netlog”: The C++ mechanism that exposes the network event stream (in particular, net::NetLog).

- “net internals”: This refers specifically to the chrome://net-internals application. It used to be a visualizer for the network event stream. This is also called “about net-internals” verbally (in reference to the URL about:net-internals).

- “netinternals dump”, or "netlog dump": A JSON file containing a

serialized event stream. The usual way to create these dumps is

using the export functionality on chrome://net-export. However dumps

can also be generated by launching Chrome with the command line flag

--log-net-log=*FILENAME*.

Structure of a NetLog event

On the C++ side of things, events are represented by a net::NetLog::Entry. This in-memory representation is intended to be easily serialized to JSON.

We try not to serialize events to JSON until it is absolutely necessary (most

of the time there are no consumers of the events so we don't have to). When we

do need to serialize the events, this is the JSON format that we use:

| Field | Type | Description |

| time | string | The time in milliseconds when the event occurred.

This is a time tick count and not a unix timestamp. However it is easily convertible given the time tick offset (time ticks are independent of system clock adjustments, guaranteeing that our timestamps don't go backwards) Another oddity is that despite being a numeric quantity, we encode time as a string. This is to avoid precision loss due to Chrome’s JSON stringifier. |

| type | number | The ID of the event type. These are enumerated in net_log_event_type_list.h |

| source | object | This field identifies which entity emitted the event. For instance this might identify a particular URLRequest.

The event stream is a flat sequence of events, which has intermixed starts and completions for all sorts of asynchronous events (potentially happening across multiple threads). The usefulness of source is to be able to group these events into logical blocks with a serial control flow. The source object itself is comprised of two sub-fields “id” and “type”. The “id” is unique across all types. The type field is included as a convenience so that processing the event stream can be done in a stateless manner. The list of possible source types is enumerated in net_log_source_type_list.h |

| phase | number | This enumeration can be one of BEGIN, END, NONE.

Let's say you wanted to log the duration of a URLRequest by logging the start and then the end: One way to do that would be to define two event types: To address this, we prefer to define a single event type, say ( |

| params | object | This is an optional field.

When provided, it represents event-specific parameters. For example, when starting a URL request, we smuggle the load flags, URL and priority of the request into the params structure. The visualizer knows how to pretty print the parameters for certain event types. For everything else it just dumps the JSON in a readable fashion. |

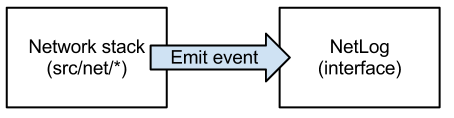

How network events are emitted

net::NetLog is the interface for emitting NetLog events.

Code throughout src/net/* that needs to emit network events must be passed a

pointer to a net::NetLog to send the events to.

Most commonly, this net::NetLog dependency is passed via a net::BoundNetLog parameter rather than directly as a net::NetLog*. This is a wrapper to “bind” the same source parameter to each event output to the stream. You can think of it like creating a private event stream for a single entity. Read more about this in net_log.h

Ultimately though, net::NetLog is just a boring interface and doesn’t actually do anything.

In the Chrome browser, the concrete implementation of net::NetLog used is ChromeNetLog. We configure things so that all network logging events for all browser profiles flow through a single instance of ChromeNetLog. ChromeNetLog is responsible for forwarding this event stream on to other interested parties via an observer mechanism.

As was alluded to earlier in the overview, FileNetLogObserver is implemented as one such observer (which serializes the network events to a JSON file).

How custom parameters are attached to events

Custom parameters are specified by a base::Value*. Value is used to represent a hierarchy of nodes, that maps directly into JSON; it has all the expected building blocks -- dictionaries, strings, numbers, arrays. See values.h for more details.

For the sake of efficiency, you do not directly create a base::Value* when emitting events. Rather, you pass in a Callback which knows how to build the Value*, in case one is needed.

This decoupling allows deferring the creation of Values until really necessary. This is good since, in the common case when not exporting events, we simply don’t need that data. Creating the custom Value parameters is not free since it involves copying internal state into a new Value* hierarchy.

This makes it easy to piece things together without needing to define helper structures! You are guaranteed that the parameter callback will only be invoked synchronously before the return of the logging function.

Here is an example of how to emit an event with custom parameters:

net_log_.BeginEvent(

NetLog::TYPE_URL_REQUEST_START_JOB,

base::Bind(&NetLogURLRequestStartCallback,

&url(), &method_, load_flags_, priority_));

By the time BeginEvent returns, NetLogURLRequestStartCallback() will have been invoked if-and-only-if the Value* parameter was needed. That is why it is safe for the callback to take pointers to url() and method_ (pointers are preferred in this case to avoid making unnecessary copies).

Here is what the bound function might look like:

Value* NetLogURLRequestStartCallback(const GURL* url, const std::string* method, int load_flags, RequestPriority priority, NetLog::LogLevel) {

DictionaryValue* dict = new DictionaryValue();

dict->SetString("url", url->possibly_invalid_spec());

dict->SetString("method", *method);

dict->SetInteger("load_flags", load_flags);

dict->SetInteger("priority", static_cast<int>(priority));

return dict;

}

What does NetLog actually log?

The logging is subject to change, but in general we try to log whatever is useful for debugging. Admittedly that description isn’t very helpful. … let me give some examples of what we currently log:

- Queueing delay to schedule DNS resolves to threads

- Stalls due to exceeding socket pool limits

- Attempts to do a TCP connect to an IP address

- Speculative DNS resolves

- Proxy resolution

- Cache hits for DNS resolves

- Reads/writes from disk cache

- Network change events

- Proxy configuration change events

- Stalls due to Chrome extensions pausing requests

- Errors

The sorts of events emitted are application specific. NetLog does not aim to replace lower-level network tools like packet captures (i.e. tcpdump). Rather it focuses on application level logic and caches which cannot possibly be known at the lower layers. There is some overlap though, since NetLog can optionally capture bytes sent/received over sockets, as well as the decrypted bytes for secure channels.

chrome://net-internals (aka about:net-internals)

Until 2018, net-internals was visualizer for the NetLog event stream. It can be used both in real-time, and also to load up post-mortem NetLog dumps. Most of the data displayed by net-internals comes from the NetLog event stream. When you load about:net-internals it installed an observer into ChromeNetLog (inside the browser process) which serializes network events to JSON and sends them over IPC to the renderer running about:net-internals application. about:net-internals is itself a JavaScript application, whose code lived under src/chrome/browser/resources/net_internals/ Some of the other data displayed by net-internals comes by polling other state functions. For instance the “DNS cache” listed on about:net-internals is obtained by polling the browser for the full list, rather than by an event-based mechanism.

In 2018, the NetLog Viewer was removed from Chrome and moved to an external repository: https://github.com/catapult-project/catapult/tree/master/netlog_viewer. The move was made for a number of reasons, including reducing installer size and attack surface. Perhaps most importantly, WebUI-run JavaScript is subject to many tight restrictions (e.g. no external libraries) which made it hard to improve and maintain. As an external application running in an untrusted web context, these restrictions can be relaxed.

Limiting Log Size

FileNetLogObserver::FileWriter explains the implementation of the basic "circular" log: To accommodate the request for a size-limited JSON file, instead of writing a single JSON output file initially, Chrome instead creates a folder full of event-containing JSON fragment files (10 by default) that are overwritten on a least-recently-written basis. When the capture is complete, Chrome will stitch the partial files together, including a "prefix" and "end" file containing the constants and other non-event data.

NetLogExporter::CreateScratchDir's function uses scratch_dir.CreateUniqueTempDir() to choose the target location.

History: the evolution of NetLog/NetInternals

version 1: LoadLog

In the beginning, there was LoadLog. This was basically a small per-request log buffer. Each URLRequest had its own LoadLog member field, which was essentially a std::vector of events and their timings. These events got accumulated directly in the browser process. In other words, it was a form of always-on passive logging. My (eroman) original use case for LoadLog was to diagnose performance problems. At the time, internal users were complaining to me about sporadic performance issues. But of course they would never reproduce them when I was around! I wanted a system that could dump the relevant information reliably AFTER the problem had already happened.

To capture the information I added LoadLogs to URLRequests to track the performance of core operations like DNS resolving and proxy resolution. This structure was really just a small set of event timings. To expose the data, I created a very simple webapp at “about:net-internals”. This page was entirely generated by C++, and believe me when I say it was basic! Since LoadLogs were attached to particular URLRequests, the visualizer would work by traversing the list of in-progress requests and subsequently printing its logs. I also kept a “graveyard” of recently finished requests in the browser so we would have some data on recently finished requests, (which ultimately was my original objective). Since about:net-internals was a static page, you would have to reload it to view new data. You would reload at the risk of losing the data it was currently displaying, since the data was backed by the browser process and might have already been evicted from the circular graveyard buffer. The exchange format for users to send me their logs was ad-hoc. Essentially they would save the HTML page, often by using the “save webpage as” feature, or simply copy-pasting. (Some users would even print-to-pdf and attach that). Due to the limitations of the C++ generated HTML formatting, this first version of “about:net-internals” used a fixed-width text format for visualizing logs. This is in fact the same cryptic display (with few changes) that is used today. (When I ported that code from C++ to Javascript I left a TODO to improve it later...) You might be wondering about the URL... why not simply call it “about:net”? Well, at the time there was already a page running at “about:net”! So I added the suffix “-internals” to distinguish them. Moreover, at the time I just wanted to build something quick and dirty and did not want to go through any UI reviews. By calling it “-internals” I was assuring people that it was just a janky developer page that didn’t need to meet our higher standards for end-user content. Interestingly “about:net” got deprecated when porting Chrome to Mac/Linux (since it relied on native UI which no one wanted to port). We eventually deleted it, but by the time that happened about:net-internals was already a well established URL so I saw no point in changing it. Today, ChromeOS has re-appropriated the url about:net.

version 2: NetLog

I wanted to do more with about:net-internals. However the fact that it was entirely generated via C++ made it very difficult to work with. Formatting HTML from C++ is no fun, and the code quickly devolves into something terrifying. That last thing I wanted to be adding to Chrome was a pile of ugly C++ code. Since webui pages were all the rage at the time (this was around the time of one of the “new tab page” rewrites), my solution was to re-write about:net-internals in JavaScript. I started doing this, and created the experimental page “about:net2” for my in-progress work. These two pages coexisted for a while since it was just a side project for me. But eventually when it was good enough, I would rename about:net2 to about:net-internals. The requirement to use JavaScript motivated the evolution of LoadLog into NetLog. The problem with LoadLog was the data was siloed by request. This meant my webapp couldn’t receive real-time updates. Rather it would have to do gnarly polling to see what had changed (and suck down all the data each time). Another issue that scared me was the browser-side accumulation of data being done with LoadLogs. When tracing I wanted to turn on higher logging granularity, but this came at the risk of using up lots of browser memory, which at the extreme might kill the process. To address this, I decided to generalize the mechanism of a per-request events into a single flat event stream. This gave me a nice choke point for transferring updates to the net-internals app. It also meant that the accumulation of data was done in a renderer process, so at worst a memory exhaustion would kill just that single tab. However this new approach also created some new problems. First of all, having a flat event stream meant needing to re-build the structure within each consumer of the NetLog. This is why the “source” parameter exists in events today. Since this is inefficient, I tried to keep browser-side interpretation of NetLog events to a minimum, and leaving this task to the visualizer. The second issue was how to maintain feature parity with LoadLog’s ability to do “passive logging.” To support passive logging (i.e. “always on logging”) I introduced the PassiveLogCollector observer. PassiveLogCollector was an observer that would watch the event stream and save recent events into a circular buffer (using a variety of heuristics to try and keep the most relevant information, clustered by request). That way if something went wrong, we would have a partial log showing what happened most recently. Getting the heuristics for PassiveLogCollector good-enough was non-trivial, since it required re-building and tracking the structure for the events. Initially PassiveLogCollector worked as promised. But it didn’t scale well over time, and has since been removed. The problem is that over time the amount of net logging grew substantially (success!), and also the event stream became more fragmented into sources (due to more overlapping async stuff). The tension with PassiveLogCollector was wanting to collect enough useful information to be able to debug problems, while at the same time not having fragile heuristics which could break at any moment leading to browser memory bloat. Ultimately I wasn’t comfortable with the risk of bad heuristics leading to bloat, and how it complicated adding new logging, and hence removed it. Some of the gaps left by the removal of PassiveLogCollector can be addressed by issue 117026.

version 3: The age of mmenke

The current evolution of about:net-internals is what I would call v3. It starts when Matt Menke teamed up with me to work on net-internals. Prior to the golden age of menke, exchanging NetLogs involved copy-pasting the webpage! This was a hassle, since invariably users would paste the useless parts of the log and not the whole thing, or provide screenshots rather than text... It was downright embarrassing. Matt added the ability to save logs to a JSON file, and to import them back again into about:net-internals, which was a huge step forward. Other core features of v3 were unittests for the JavaScript frontend, and a timeline visualization to plot network metrics both in real-time and from postmortem dump files. v3 also got custom views for Sockets, SPDY sessions, Winsock LSPs (SPIs), a slew of other functionality and increased logging sprinkled throughout the network stack. version 4: The extraction of the viewer In 2018, the JavaScript of the viewer application was removed from the net-internals page and extracted to a new repository and running from a public app server. The reasons for this extraction are documented in the NetLog Viewer design document.

version ?:

… patches welcome!